Artificial Intelligence (AI) represents many possible commercial opportunities for companies.

We’re seeing the development of robots that can do work intelligently, bots that calculate probabilities or respond to requests, and experimentation in all things autonomous.

Commercial applications are often fairly clear – many companies will jump at the chance to streamline processes and cut out costly or error-prone human intervention. The thing is, we’re not yet at the point where AI is developed enough to move forward without humans at the helm.

It’s also important to look at AI through the lens of what governments and different nations desire from it. Big issues in this area include using AI within the judicial system, the military and for collecting intelligence.

Ethical questions abound – where does the application of AI stray into ethical mud? What should we be considering as this technology rapidly develops?

Machine learning and bias

A Cambridge University Press paper raises an important scenario. Let’s say a bank uses an algorithm developed with machine learning to recommend mortgage applications for rejection or approval:

“A rejected applicant brings a lawsuit against the bank, alleging that the algorithm is discriminating racially against mortgage applicants. The bank replies that this is impossible, since the algorithm is deliberately blinded to the race of the applicants. Indeed, that was part of the bank’s rationale for implementing the system. Even so, statistics show that the bank’s approval rate for black applicants has been steadily dropping.

Submitting ten apparently equally qualified genuine applicants (as determined by a separate panel of human judges) shows that the algorithm accepts white applicants and rejects black applicants. What could possibly be happening?

Finding an answer may not be easy. If the machine learning algorithm is based on a complicated neural network, or a genetic algorithm produced by directed evolution, then it may prove nearly impossible to understand why, or even how, the algorithm is judging applicants based on their race.”
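One way a “blinded” algorithm can still end up treating groups differently is through a proxy: a visible feature that happens to correlate with the hidden one. The sketch below is a toy illustration of that idea, not a reconstruction of any real bank's system. The `Applicant` fields, the `blind_model` rule, the neighborhood names and all of the data are made up for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Applicant:
    group: str         # protected attribute; the model never reads this field
    neighborhood: str  # visible feature that happens to correlate with group
    income: int        # visible feature


# Hypothetical rule standing in for a trained model. It reads only
# neighborhood and income, so it is "blind" to group.
PENALIZED_NEIGHBORHOODS = {"south"}


def blind_model(applicant: Applicant) -> bool:
    """Approve if income is high enough and the neighborhood is not penalized."""
    return (
        applicant.income >= 50_000
        and applicant.neighborhood not in PENALIZED_NEIGHBORHOODS
    )


def approval_rates(applicants, decide):
    """Return the share of approved applicants for each group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for a in applicants:
        total[a.group] += 1
        approved[a.group] += int(decide(a))
    return {g: approved[g] / total[g] for g in total}


# Made-up data: both groups have the same four incomes, but group B
# lives mostly in the penalized neighborhood.
APPLICANTS = [
    Applicant("A", "north", 60_000),
    Applicant("A", "north", 55_000),
    Applicant("A", "south", 70_000),
    Applicant("A", "north", 45_000),
    Applicant("B", "south", 60_000),
    Applicant("B", "south", 55_000),
    Applicant("B", "north", 70_000),
    Applicant("B", "south", 45_000),
]

rates = approval_rates(APPLICANTS, blind_model)
print(rates)
print("ratio of approval rates (B / A):", rates["B"] / rates["A"])
```

Even though `group` is never read by the rule, the approval rates differ because `neighborhood` carries some of the same information. Comparing approval rates per group, as `approval_rates` does here, is one simple audit that can surface this kind of gap; it does not by itself explain the cause.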

The thing is, this potential for bias is not just theoretical: it has already been happening in real-life applications of AI. ProPublica published an article investigating the use of algorithms in the US justice system to predict the likelihood of re-offending and to recommend sentencing for individuals.

They found that, because we have yet to figure out how to eliminate human bias from these kinds of programs, the data sets reflect existing biases and the algorithms replicate racial bias in the justice system. The story gives examples of these algorithms rating people of one race more favorably than another, only for the actual outcomes to turn out the opposite of what was predicted.
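A common way to check whether a risk score errs differently for different groups is to compare error rates per group: how often people who did not re-offend were flagged as high risk (false positives), and how often people who did re-offend were rated low risk (false negatives). The sketch below shows the arithmetic on made-up records; the group labels and every data point are invented for illustration and are not taken from the ProPublica investigation.

```python
from collections import defaultdict

# Made-up records: (group, predicted_high_risk, actually_reoffended)
RECORDS = [
    ("X", True, False), ("X", True, False), ("X", False, False),
    ("X", False, False), ("X", True, True), ("X", True, True),
    ("Y", False, False), ("Y", False, False), ("Y", False, False),
    ("Y", True, False), ("Y", False, True), ("Y", True, True),
]


def error_rates(records):
    """Return {group: (false_positive_rate, false_negative_rate)}.

    Assumes every group has at least one person who re-offended and
    one who did not; otherwise the division below would fail.
    """
    counts = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for group, predicted_high, reoffended in records:
        c = counts[group]
        if reoffended:
            c["pos"] += 1
            c["fn"] += int(not predicted_high)  # rated low risk, but re-offended
        else:
            c["neg"] += 1
            c["fp"] += int(predicted_high)      # rated high risk, did not re-offend
    return {g: (c["fp"] / c["neg"], c["fn"] / c["pos"]) for g, c in counts.items()}


for group, (fpr, fnr) in error_rates(RECORDS).items():
    print(group, "false positive rate:", fpr, "false negative rate:", fnr)
```

In this toy data the score is right for four of six people in each group, so overall accuracy looks identical. The mistakes fall differently, though: group X is wrongly flagged as high risk more often, while group Y is wrongly rated low risk more often. That is why looking only at overall accuracy can hide the kind of disparity described above.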

In 2014, then-US Attorney General Eric Holder warned:

“Although these measures were crafted with the best of intentions, I am concerned that they inadvertently undermine our efforts to ensure individualized and equal justice,” he said, adding, “they may exacerbate unwarranted and unjust disparities that are already far too common in our criminal justice system and in our society.”

A glaring problem is that there have been few independent studies into these algorithms, yet there are multiple examples of judges giving heavy weight to the algorithm’s report in sentencing decisions.

Think about this same tendency toward bias with applications such as intelligence and military activity in mind. Will we have rogue, militant robots out there targeting people who are otherwise innocent?

Commercially, consider that banking example, or even insurance, or any other decision-making application. How can we eliminate bias from machine learning? This is an important question for further development.

Transparency of AI

Artificial intelligence built on machine learning develops its own models to make assessments, and those models are often hard for people to inspect, so there is a lack of transparency overall. How, then, do countries built on democratic processes justify decisions that are based on the recommendations of AI? Transparency is a fundamental value of democracy – will people be sent to prison “because the computer says so”?

China’s top security officer announced that they will be using AI to predict terror and unrest before it even happens. It has been likened to the movie Minority Report, where the Washington DC Police were arresting people for future crimes.

While the thought of reducing terrorism definitely has appeal, this approach has brought criticism over state control. Does this sort of use of AI impinge on people’s privacy? What about the potential for it to be completely wrong?

Some cities in China are already using AI such as facial recognition technology in order to spot traffic offenses. Data tends to be shared without restriction, raising ethical issues. Every country has a different view on what is “right” when it comes to issues of data, privacy and reliance on AI – where do ethical concerns make way for practical application?

Artificial intelligence and wealth

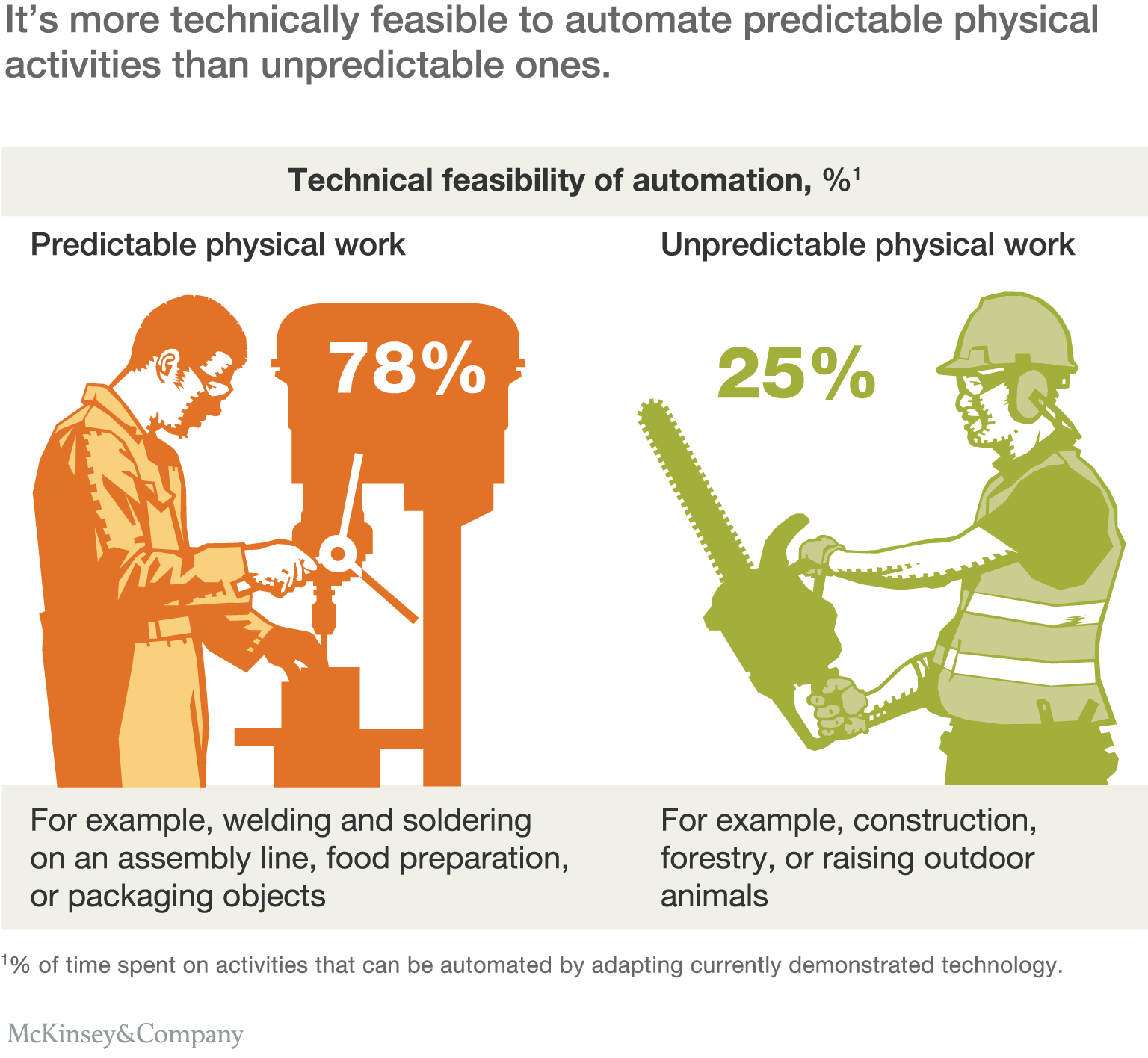

“Taking our jobs” is a common concern when it comes to the development of AI. Our economies are traditionally based upon compensation for contribution to the economy. This is usually an hourly wage or salary, but what happens as tasks that were the domain of humans are given over to AI?

A concern is that the owners of the AI now collect and control most of the revenue, with fewer human operators to pay for work. What happens to the vast majority of people who rely on salary or wages to get by? How do we structure a “post-labor” economy fairly?

Ideas such as universal basic income are coming into the discussion, with strong views for and against. There are no easy answers – any job with a high level of predictability is ripe for being taken over by AI, as the McKinsey & Company image in this section shows.

Potential for mistakes

Whether human or machine, intelligence comes from learning. As humans, we learn from any scenario, including our mistakes. The issue with machine learning is that, while it goes through a period of training for different scenarios, it would be virtually impossible to cover every single combination of scenarios that require a decision to be made.
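A little arithmetic shows why covering every combination is out of reach. The sketch below multiplies together the number of values a handful of conditions could take. The factor names and counts are hypothetical and chosen only to illustrate how quickly the total grows.

```python
import math

# Hypothetical factors a driving system might face, with a made-up
# number of distinct values for each one.
FACTORS = {
    "weather": 5,
    "lighting": 4,
    "road_type": 6,
    "traffic_density": 5,
    "pedestrian_behavior": 8,
    "obstacle_type": 10,
}

# Every combination of values is a separate scenario, so the counts multiply.
total_scenarios = math.prod(FACTORS.values())
print("combinations from six factors:", total_scenarios)

# Adding just one more factor with 10 possible values multiplies the total again.
print("with a seventh factor:", total_scenarios * 10)
```

Six coarse factors already give tens of thousands of combinations, and each extra factor multiplies the total rather than adding to it. Real-world conditions vary continuously and along far more dimensions, so training can only ever sample the space.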

The fatal accident in which a self-driving Uber vehicle hit a pedestrian highlights this potential for mistakes. At the time of the crash, the driver, who would usually be engaged and ready to act, was looking down. The car did not recognize the pedestrian in time and did not slow down or try to avoid hitting her.

Perhaps AI will learn from mistakes, but there’s a real issue if those mistakes prove to be fatal. Research shows that AI can be fooled in ways that humans are unlikely to fall for. On the other hand, humans don’t actually have to experience a fatal mistake to learn from it. We learn from stories we hear or read, or we have in-built instincts which tell us “don’t poke the rattlesnake”, for example.
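To give a feel for how a model can be fooled, here is a toy sketch using a simple linear classifier. It nudges every input feature by a small amount in whichever direction pushes the score toward the opposite label, which is the same basic idea behind gradient-sign attacks on larger models. The weights, the input and the step size `EPSILON` are all made up for the example; real attacks target far more complex models.

```python
def score(weights, bias, x):
    """Linear score: positive means label 1, otherwise label 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def perturb_against(weights, x, epsilon, current_label):
    """Move each feature by at most epsilon, in the direction that pushes
    the score away from current_label."""
    direction = -1 if current_label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Made-up model and input.
WEIGHTS = [0.5, -0.25, 0.75, -0.5]
BIAS = 0.0
EPSILON = 0.125

x = [0.5, 0.5, 0.25, 0.25]
label = int(score(WEIGHTS, BIAS, x) > 0)

x_adv = perturb_against(WEIGHTS, x, EPSILON, label)
label_adv = int(score(WEIGHTS, BIAS, x_adv) > 0)

print("original input:", x, "label:", label)
print("perturbed input:", x_adv, "label:", label_adv)
```

No single feature moves by more than `EPSILON`, yet the predicted label flips, because many small changes that all push in the same direction add up. A person looking at the two inputs would probably consider them nearly the same.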

A recent article by David Glance sums the issue up well:

“Machine learning suffers from a fundamental problem in that its ability to carry out a task depends on data that is used to train it. What exact algorithm it ends up using to fulfil its eventual purpose and what features are the most important is largely unknown. With deep learning, the multiple layers that make up the overall neural network of the machine learning software make this process even more mysterious and unknown.”

Final thoughts

Where is the intersection of commercial application of AI and ethics? We’ve really only scratched the surface here as there are multiple other potential issues where application and ethics meet.

Thus far, we have not successfully removed a tendency toward bias from AI and we’re already seeing consequences as a result. A lack of transparency and issues with privacy also dominate, while concerns over wealth distribution and safety are big issues too.

As a final thought, technologies like this are often promoted with good intentions. Nuclear power was championed as a cheap energy source, with its implications for people and the environment becoming clear later. AI represents many great commercial opportunities, but what will be the longer-term effects?
